It’s been a whirlwind since Nvidia’s Gamescom conference, and this is one of those cases in technology where plenty of questions were answered but just as many new ones were raised. Nvidia’s GeForce RTX 20 series is very impressive on paper, but also rather expensive, with the RTX 2080 Ti retailing for $999 for a basic AIB variant of the card.

You would think then, given the pricing, that Jensen Huang, Nvidia’s leather-jacket-wearing CEO, would have been very eager to point out the performance of the RTX 2080 Ti and the other SKUs… but this wasn’t the case. Much of the conference focused on where computer graphics had come from (Jensen skipped over early cards such as the TNT series and started with the GeForce 256) and how fancy real-time ray tracing is.

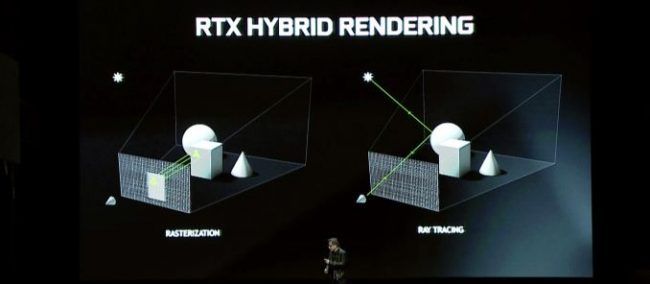

Real-time ray tracing is now here, and it ‘just works’: phrases Jensen would utter consistently throughout the conference. And there are few who’d deny the visuals on display were impressive. Metro Exodus, Battlefield and Shadow of the Tomb Raider all looked stunning, despite the tech’s fairly early implementation.

Starting things out with ray tracing performance: multiple reports say Shadow of the Tomb Raider struggled to hit 60 FPS (often sitting in the mid 50s) at the pedestrian resolution of 1080p. But it’s important to take into consideration that the game isn’t launching with ray tracing support, which will come later via a post-launch patch.

I’m not for one moment going to tell you that this means you’ll only see a dip of a few percent in FPS with ray tracing on in the final game. Instead, with optimisation this poor, it’s hard to nail down performance.

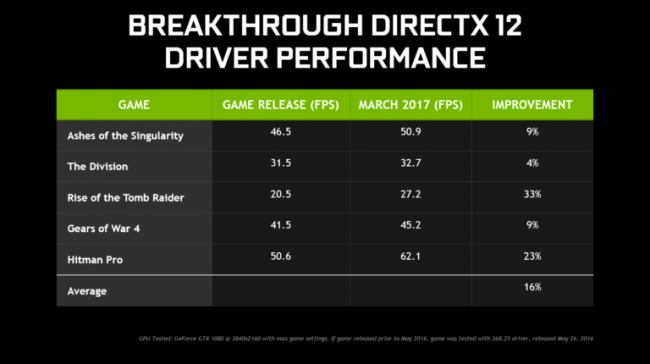

Think of this: Nvidia averaged a 16 percent improvement in performance on DX12 titles from each game’s release up until March 2017. And that was DX12, which was established and released, with games that were already out, measured against previous WHQL drivers. Here we’re looking at early game code, on early drivers, with unfinished SDKs.

With that said, do not go around thinking that you’ll be playing Shadow of the Tomb Raider on final release at 4K and 60 FPS with ray tracing on. Ray tracing will still be expensive, and how much of a performance cost it carries remains to be seen. Just don’t take the early results as accurate, is all I’m saying.
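For a rough sense of scale, here is a back-of-the-envelope sketch in Python. It borrows the 16 percent DX12 figure above purely as an assumption (nobody has said ray tracing will improve by that amount) and applies it to a frame rate in the mid 50s, which is what the early reports describe. The function names and the 55 FPS starting point are mine, for illustration only.

```python
def project_fps(current_fps: float, uplift_percent: float) -> float:
    """Scale a frame rate by a percentage uplift (e.g. from driver updates)."""
    return current_fps * (1 + uplift_percent / 100)


def frame_time_ms(fps: float) -> float:
    """Convert frames per second into milliseconds per frame."""
    return 1000.0 / fps


# Assumption: "mid 50s" at 1080p, taken from the early hands-on reports.
REPORTED_FPS = 55.0
# Assumption: reuse the 16 percent DX12 average as a stand-in uplift.
ASSUMED_UPLIFT_PERCENT = 16.0

if __name__ == "__main__":
    projected = project_fps(REPORTED_FPS, ASSUMED_UPLIFT_PERCENT)
    print(f"Reported:  {REPORTED_FPS:.1f} FPS ({frame_time_ms(REPORTED_FPS):.2f} ms per frame)")
    print(f"Projected: {projected:.1f} FPS ({frame_time_ms(projected):.2f} ms per frame)")
```

Under those assumptions the projection lands at roughly 64 FPS at 1080p: enough to clear 60, but nowhere near what 4K would demand, which is why I’d treat the early numbers as neither a promise nor a verdict.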

There’s also a rather interesting report from the folks over at TechRadar, and they back up Nvidia’s own claims. According to TechRadar, they played a variety of PC games and many of those games hit OVER 100 FPS at 4K at max quality settings. They can’t reveal which games because of an NDA, but they seemed rather impressed. They also said that heat might actually have hurt the cards’ performance.

GPUs operate on base and boost frequencies, with heat and power (and GPU load) dictating how high a card boosts. So obviously cooler environments can equate to much higher clock frequencies and better FPS… but for whatever reason, Nvidia had each machine’s exhaust aimed at the next PC’s intake fan. So in short, if you were halfway down the line or further, the machine must have been operating a few degrees warmer than it should.

Nvidia’s own claims tell us a similar story – that today’s games will have no issue at 4K at 60FPS. “Turing’s fusing of advanced shaders, AI and ray tracing will deliver 60 FPS gaming in 4K HDR today, and is the platform for a new era of photorealism for fully ray-traced games.”

I do have a few issues with how Nvidia have handled things though. There should have been early benchmarks at the event, even if without ray tracing. Nvidia could have simply said “we’re still working on the drivers, so this is something that’ll get better over the weeks but here’s where we’re at right now” and simply shown a few games running at high FPS.

Putting the cards up for preorder a month early also stung, and Nvidia’s own website has started to run out of preorder stock. So if you wanted to wait and see (and who can blame you), then you’ll be waiting longer, or praying to the AIB gods that you can get a card.

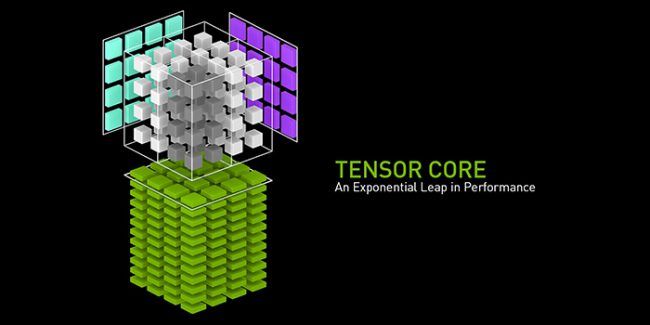

There’s also much made of Nvidia’s RTX-OPS. This is a figure Nvidia have created for the next generation, because it is a measure of performance they feel is more ‘appropriate’ for games of the future. It also makes for a much more impressive number, since the older generation of GPUs weren’t blessed with Tensor cores or RT cores.

But yes, RT cores and Tensor (AI) cores can’t do a damn thing for traditional rendering applications. So they essentially sit there doing nothing while you’re playing an older game. But even so, this is a measurement Nvidia are using… will it catch on? Hmmm.

As for the Tensor Cores, I’d not be surprised if we see them, along with neural networks, being used for in-game AI. Don’t forget, we recently saw EA experiment with deep learning in Battlefield 1.

I’m sure this isn’t an accident; Nvidia and EA know what the other is up to. It’s not outside the realms of possibility that we’ll soon see games using Tensor Cores to considerably improve the performance of your AI teammates. The deep-learning-trained AI destroyed the traditional bots in BF1, so imagine the possibilities for games of the future.

Naturally, there’s the console ‘issue’ (i.e. consoles don’t have this hardware), so how this would be achieved in reality remains to be seen.